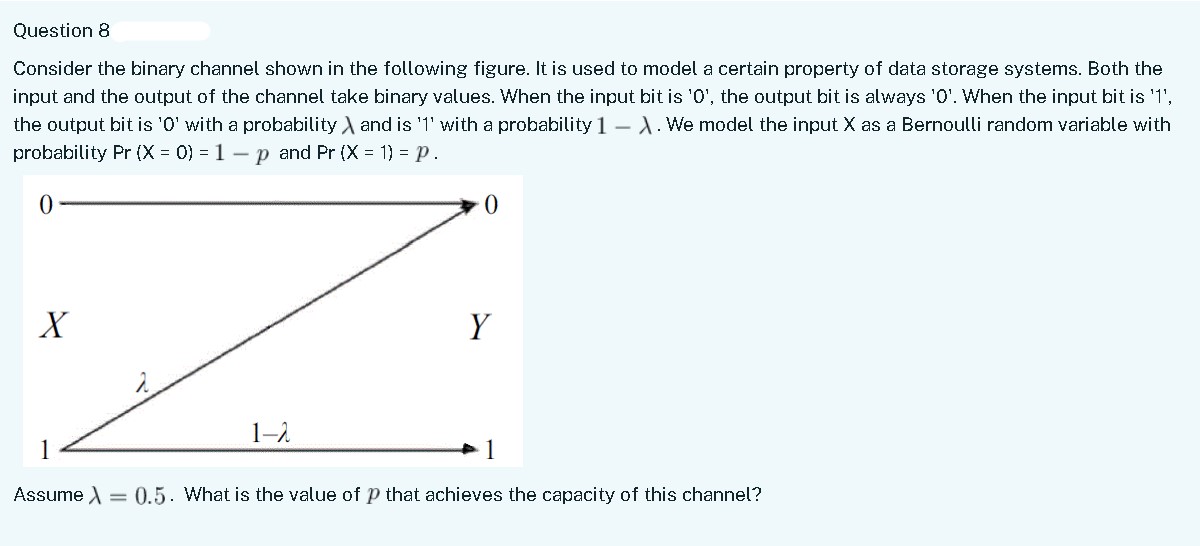

Please help solve. The resulting answer should be p = 0.4, but I'm not sure how to attain this. They give a hint: you may need to derive H(Y) = -[0.5p log(0.5p) + (1 - 0.5p) log(1 - 0.5p)] and H(Y|X) = p.

Consider the binary channel shown in the following figure. It is used to model a certain property of data storage systems. Both the input and the output of the channel take binary values. When the input bit is '0', the output bit is always '0'. When the input bit is '1', the output bit is '0' with probability 0.5 and '1' with probability 0.5. We model the input as a Bernoulli random variable with P(X = 1) = p and P(X = 0) = 1 - p. Assume . What is the value of p that achieves the capacity of this channel?

Expert Answer

Solution: To determine the value of p that achieves the capacity of the given binary channel, we must maximize the mutual information I(X;Y), where X is the input random variable and Y is the output random variable. The mutual information is given by I(X;Y) = H(Y) - H(Y|X), where H(Y) is the entropy of Y and H(Y|X) is the conditional entropy of Y given X.
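As a quick numerical check, here is a minimal sketch that plugs the two expressions from the hint into I(X;Y) = H(Y) - H(Y|X) and searches for the maximizing p. It assumes logarithms are base 2 (entropy in bits), which is what makes H(Y|X) = p hold. The function names and the grid resolution are illustrative choices, not part of the original problem.

```python
import math


def binary_entropy(q: float) -> float:
    """Binary entropy in bits, using the convention 0 * log(0) = 0."""
    if q <= 0.0 or q >= 1.0:
        return 0.0
    return -(q * math.log2(q) + (1.0 - q) * math.log2(1.0 - q))


def mutual_information(p: float) -> float:
    """I(X;Y) = H(Y) - H(Y|X), using the expressions given in the hint."""
    h_y = binary_entropy(0.5 * p)  # hint: P(Y = 1) = 0.5p
    h_y_given_x = p                # hint: H(Y|X) = p (assumes log base 2)
    return h_y - h_y_given_x


def best_input_probability(steps: int = 10_000) -> float:
    """Grid search over p in [0, 1]; the resolution is an arbitrary choice."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=mutual_information)


p_star = best_input_probability()
capacity = mutual_information(p_star)
print(f"p* = {p_star:.4f}, I(X;Y) = {capacity:.4f} bits")
# prints: p* = 0.4000, I(X;Y) = 0.3219 bits
```

The same value follows analytically. Setting dI/dp = 0.5 log2((1 - 0.5p) / (0.5p)) - 1 = 0 gives (1 - 0.5p) / (0.5p) = 4, so p = 0.4, and the maximum is log2(5) - 2, about 0.3219 bits.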