(Solved): Problem 1. Suppose a random sample $x_1, \dots, x_n \sim \text{Bernoulli}(p)$ with $0 \le p \le 1$. Find the maxi ...

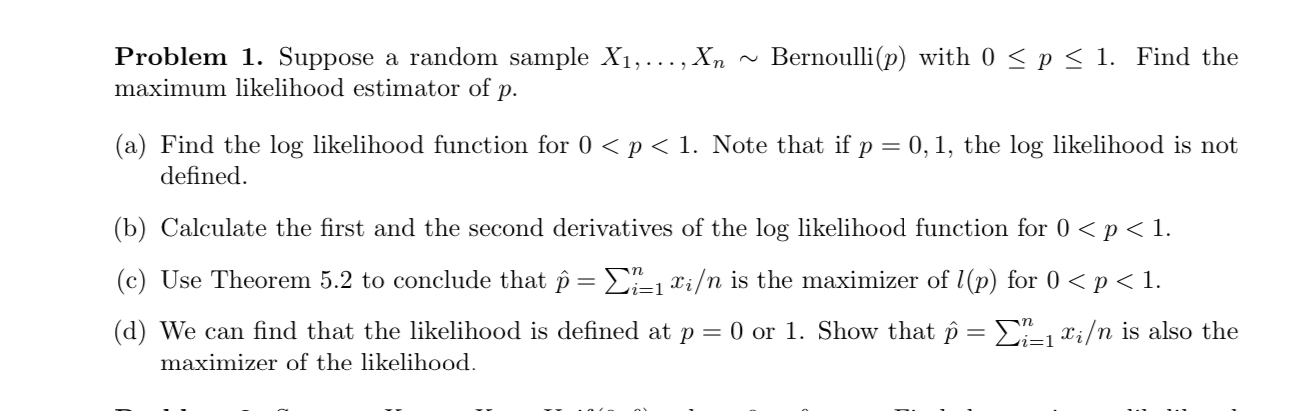

Problem 1. Suppose a random sample $x_1, \dots, x_n \sim \text{Bernoulli}(p)$ with $0 \le p \le 1$. Find the maximum likelihood estimator of $p$.

(a) Find the log-likelihood function for $0 < p < 1$. Note that if $p = 0$ or $p = 1$, the log-likelihood is not defined.

(b) Calculate the first and the second derivatives of the log-likelihood function for $0 < p < 1$.

(c) Use Theorem 5.2 to conclude that $\hat{p} = \sum_{i=1}^n x_i / n$ is the maximizer of $l(p)$ for $0 < p < 1$.

(d) We can find that the likelihood is defined at $p = 0$ or $1$. Show that $\hat{p} = \sum_{i=1}^n x_i / n$ is also the maximizer of the likelihood.
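As a numerical sanity check on parts (a) through (d), the sketch below evaluates the Bernoulli log-likelihood $l(p) = s \log p + (n - s) \log(1 - p)$, where $s = \sum_{i=1}^n x_i$, together with its first and second derivatives, and compares a grid maximizer with the sample mean. The sample `xs`, the grid resolution, and all function names are assumptions made for illustration; they do not come from the problem, and the check does not replace the argument via Theorem 5.2.

```python
import math

def log_likelihood(p, xs):
    """Bernoulli log-likelihood l(p); only defined for 0 < p < 1."""
    s, n = sum(xs), len(xs)
    return s * math.log(p) + (n - s) * math.log(1 - p)

def first_derivative(p, xs):
    """l'(p) = s/p - (n - s)/(1 - p)."""
    s, n = sum(xs), len(xs)
    return s / p - (n - s) / (1 - p)

def second_derivative(p, xs):
    """l''(p) = -s/p^2 - (n - s)/(1 - p)^2."""
    s, n = sum(xs), len(xs)
    return -s / p**2 - (n - s) / (1 - p) ** 2

def likelihood(p, xs):
    """L(p) = p^s (1 - p)^(n - s), defined on 0 <= p <= 1.

    Python evaluates 0 ** 0 as 1, matching the usual convention here.
    """
    s, n = sum(xs), len(xs)
    return p**s * (1 - p) ** (n - s)

# Made-up sample (an assumption for illustration): 5 successes out of 8.
xs = [1, 0, 1, 1, 0, 1, 0, 1]
p_hat = sum(xs) / len(xs)

# Interior grid on (0, 1); the log-likelihood is undefined at the endpoints.
grid = [k / 1000 for k in range(1, 1000)]
grid_argmax = max(grid, key=lambda p: log_likelihood(p, xs))

print("sample mean:", p_hat)
print("grid maximizer of l(p):", grid_argmax)
print("l'(p_hat):", first_derivative(p_hat, xs))
print("l''(p_hat):", second_derivative(p_hat, xs))

# Boundary cases from part (d): all-zero and all-one samples.
zeros, ones = [0, 0, 0], [1, 1, 1]
boundary_ok = (
    all(likelihood(0.0, zeros) > likelihood(p, zeros) for p in grid)
    and all(likelihood(1.0, ones) > likelihood(p, ones) for p in grid)
)
print("boundary maximizers match sample mean:", boundary_ok)
```

For a sample with both zeros and ones, the first derivative vanishes at the sample mean and the second derivative is negative there. For all-zero or all-one samples, the sample mean is 0 or 1, and the likelihood (unlike the log-likelihood) can still be evaluated at that endpoint.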