(Solved): Q19. Suppose the correct regression model is given by: $\hat{y}=\hat{\beta}_0+\hat{\beta}_1x_1+$ ...

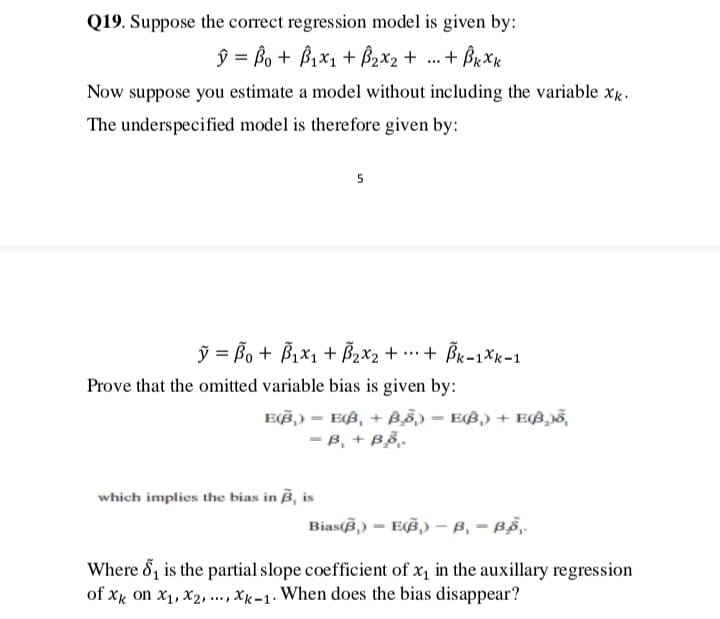

Q19. Suppose the correct regression model is given by:

$$\hat{y}=\hat{\beta}_0+\hat{\beta}_1x_1+\hat{\beta}_2x_2+\dots+\hat{\beta}_kx_k$$

Now suppose you estimate a model without including the variable $x_k$.

The underspecified model is therefore given by:

$$\tilde{y}=\tilde{\beta}_0+\tilde{\beta}_1x_1+\tilde{\beta}_2x_2+\cdots+\tilde{\beta}_{k-1}x_{k-1}$$

Prove that the omitted variable bias is given by:

$$\begin{aligned}
E(\tilde{\beta}_1) &= E(\hat{\beta}_1+\hat{\beta}_2\tilde{\delta}_1)=E(\hat{\beta}_1)+E(\hat{\beta}_2)\tilde{\delta}_1 \\
&= \beta_1+\beta_2\tilde{\delta}_1,
\end{aligned}$$

which implies the bias in $\tilde{\beta}_1$ is

$$\operatorname{Bias}(\tilde{\beta}_1)=E(\tilde{\beta}_1)-\beta_1=\beta_2\tilde{\delta}_1.$$

Here $\tilde{\delta}_1$ is the partial slope coefficient on $x_1$ in the auxiliary regression of $x_k$ on $x_1, x_2, \dots, x_{k-1}$. When does the bias disappear?
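
The identity behind the result can be checked numerically. The sketch below is only an illustration under stated assumptions: it uses the two-regressor case ($k = 2$, so the omitted variable is $x_2$, which matches the $\beta_2$ in the formula), and the parameter values, sample size, seed, and the helper name `ols` are all hypothetical choices, not part of the question. It fits the full model, the underspecified model, and the auxiliary regression of $x_2$ on $x_1$, then compares $\tilde{\beta}_1$ with $\hat{\beta}_1+\hat{\beta}_2\tilde{\delta}_1$.

```python
import numpy as np


def ols(X, y):
    """Least-squares coefficients; X must already contain a constant column."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


rng = np.random.default_rng(0)          # arbitrary seed (assumption)
n = 500                                 # arbitrary sample size (assumption)
beta0, beta1, beta2 = 1.0, 2.0, -1.5    # hypothetical true parameters

x1 = rng.normal(size=n)
x2 = 0.6 * x1 + rng.normal(size=n)      # omitted variable, correlated with x1
u = rng.normal(size=n)
y = beta0 + beta1 * x1 + beta2 * x2 + u

const = np.ones(n)
b_hat = ols(np.column_stack([const, x1, x2]), y)   # full model: [b0, b1, b2]
b_tilde = ols(np.column_stack([const, x1]), y)     # underspecified model
delta = ols(np.column_stack([const, x1]), x2)      # auxiliary regression

# Value of beta_tilde_1 implied by the identity beta_hat_1 + beta_hat_2 * delta_1
implied = b_hat[1] + b_hat[2] * delta[1]
gap = abs(b_tilde[1] - implied)

print("beta_tilde_1:", b_tilde[1])
print("beta_hat_1 + beta_hat_2 * delta_tilde_1:", implied)
print("absolute gap:", gap)
```

The relation $\tilde{\beta}_1=\hat{\beta}_1+\hat{\beta}_2\tilde{\delta}_1$ is an algebraic property of OLS in any sample, so the gap should differ from zero only by floating-point rounding. Taking expectations conditional on the regressors gives the bias term $\beta_2\tilde{\delta}_1$, which vanishes when $\beta_2 = 0$ (the omitted variable does not belong in the model) or when $\tilde{\delta}_1 = 0$ (the omitted variable is uncorrelated with $x_1$ in the sample, after controlling for the other included regressors).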