Please solve the following problem.

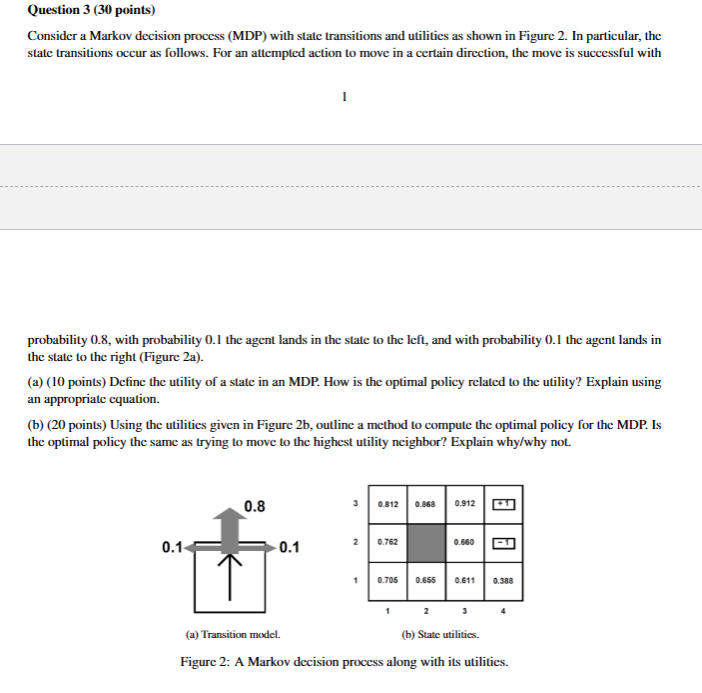

Consider a Markov decision process (MDP) with state transitions and utilities as shown in Figure 2. The state transitions occur as follows: when the agent attempts to move in a certain direction, the move succeeds with probability 0.8; with probability 0.1 the agent lands in the state to the left, and with probability 0.1 it lands in the state to the right (Figure 2a).

(a) (10 points) Define the utility of a state in an MDP. How is the optimal policy related to the utility? Explain using an appropriate equation.

(b) (20 points) Using the utilities given in Figure 2, outline a method to compute the optimal policy for the MDP. Is the optimal policy the same as trying to move to the highest-utility neighbor? Explain why or why not.

Figure 2: A Markov decision process along with its utilities.
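A common way to approach part (b) is policy extraction from known utilities: π\*(s) = argmax_a Σ_{s'} P(s' | s, a) U(s'). The reward R(s) and discount γ do not depend on the action, so they drop out of the argmax. The Python sketch below illustrates this under stated assumptions. The grid is a hypothetical 3×3 layout and the utilities in `U` are made-up placeholders, not the values from Figure 2 (which are not reproduced here). A move that would leave the grid is assumed to keep the agent in place, and all function names are hypothetical. The "left" and "right" slips are modeled as the two directions perpendicular to the intended move, each with probability 0.1. The sketch also shows that heading for the highest-utility neighbor can differ from the expected-utility choice.

```python
from typing import Dict, Tuple

State = Tuple[int, int]

MOVES: Dict[str, Tuple[int, int]] = {
    "up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1),
}
# The two directions at right angles to each intended move; each gets probability 0.1.
PERPENDICULAR: Dict[str, Tuple[str, str]] = {
    "up": ("left", "right"),
    "down": ("right", "left"),
    "left": ("down", "up"),
    "right": ("up", "down"),
}

# Hypothetical utilities on a 3x3 grid (NOT the Figure 2 values).
U: Dict[State, float] = {
    (0, 0): 0.0,  (0, 1): 0.9, (0, 2): 0.0,
    (1, 0): 0.85, (1, 1): 0.5, (1, 2): -1.0,
    (2, 0): 0.0,  (2, 1): 0.8, (2, 2): 0.0,
}


def step(s: State, direction: str, utilities: Dict[State, float]) -> State:
    """Deterministic result of moving; leaving the grid keeps the agent in place (assumption)."""
    dr, dc = MOVES[direction]
    nxt = (s[0] + dr, s[1] + dc)
    return nxt if nxt in utilities else s


def transition(s: State, action: str, utilities: Dict[State, float]) -> Dict[State, float]:
    """P(s' | s, a): 0.8 for the intended move, 0.1 for each perpendicular slip."""
    probs: Dict[State, float] = {}
    outcomes = [(action, 0.8)] + [(d, 0.1) for d in PERPENDICULAR[action]]
    for direction, p in outcomes:
        s2 = step(s, direction, utilities)
        probs[s2] = probs.get(s2, 0.0) + p  # merge outcomes that land in the same state
    return probs


def expected_utility(s: State, action: str, utilities: Dict[State, float]) -> float:
    """Sum over s' of P(s' | s, a) * U(s')."""
    return sum(p * utilities[s2] for s2, p in transition(s, action, utilities).items())


def optimal_action(s: State, utilities: Dict[State, float]) -> str:
    """Policy extraction: argmax over actions of the expected utility."""
    return max(MOVES, key=lambda a: expected_utility(s, a, utilities))


def greedy_neighbor_action(s: State, utilities: Dict[State, float]) -> str:
    """Naive rule: aim at the highest-utility neighbor, ignoring the slip probabilities."""
    return max(MOVES, key=lambda a: utilities[step(s, a, utilities)])


if __name__ == "__main__":
    center = (1, 1)
    for a in MOVES:
        print(a, round(expected_utility(center, a, U), 3))
    print("optimal:", optimal_action(center, U))          # left
    print("greedy: ", greedy_neighbor_action(center, U))  # up
```

With these placeholder numbers, the greedy rule aims "up" at the 0.9 neighbor. However, trying to move up risks a 0.1 slip into the −1.0 state. Moving "left" (toward 0.85) can only slip into the 0.9 and 0.8 states, so its expected utility is higher. In general, the optimal policy weighs every outcome of an action by its probability rather than looking only at the intended destination.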