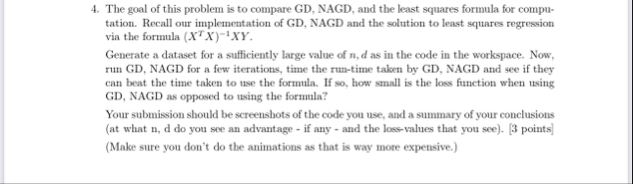

The goal of this problem is to compare GD, NAGD, and the least squares formula in terms of computation. Recall our implementation of GD and NAGD, and the solution to least squares regression via the formula

$$(X^\top X)^{-1} X^\top Y.$$

Generate a dataset for a sufficiently large value of $n, d$, as in the code in the workspace. Now run GD and NAGD for a few iterations, measure the run-time of each, and see whether they can beat the time taken to use the formula. If so, how small is the loss function when using GD or NAGD as opposed to using the formula? Your submission should be screenshots of the code you use and a summary of your conclusions (at what $n, d$ you see an advantage, if any, and the loss values you see). [3 points] (Make sure you don't run the animations, as they are far more expensive.)
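The workspace code is not reproduced here, so the following is only a minimal NumPy sketch of the comparison, under several assumptions: NAGD is read as Nesterov's accelerated gradient descent with the common $(k-1)/(k+2)$ momentum schedule, the data are synthetic Gaussian, the loss is $\frac{1}{2n}\lVert Xw - Y\rVert^2$, and the step size $1/L$ is estimated by a few power iterations. All function names (`make_data`, `closed_form`, `lipschitz_estimate`, `gd`, `nagd`, `compare`) and the problem sizes are hypothetical, not the workspace's own.

```python
import time

import numpy as np


def make_data(n, d, noise=0.1, seed=0):
    """Hypothetical data generator: Y = X w_true + Gaussian noise."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, d))
    w_true = rng.standard_normal(d)
    Y = X @ w_true + noise * rng.standard_normal(n)
    return X, Y


def loss(X, Y, w):
    """Least squares loss (1 / (2n)) * ||X w - Y||^2."""
    r = X @ w - Y
    return 0.5 * np.mean(r ** 2)


def grad(X, Y, w):
    """Gradient of the loss above: (1 / n) * X^T (X w - Y); costs O(n d)."""
    return X.T @ (X @ w - Y) / X.shape[0]


def closed_form(X, Y):
    """(X^T X)^{-1} X^T Y with an explicit inverse, mirroring the formula."""
    return np.linalg.inv(X.T @ X) @ (X.T @ Y)


def lipschitz_estimate(X, iters=50, seed=0):
    """Power-iteration estimate of L = lambda_max(X^T X / n), O(n d) per step."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(X.shape[1])
    for _ in range(iters):
        v = X.T @ (X @ v) / X.shape[0]
        v /= np.linalg.norm(v)
    # Rayleigh quotient of the unit vector v (never exceeds lambda_max).
    return v @ (X.T @ (X @ v)) / X.shape[0]


def gd(X, Y, step, iters):
    """Plain gradient descent from w = 0."""
    w = np.zeros(X.shape[1])
    for _ in range(iters):
        w = w - step * grad(X, Y, w)
    return w


def nagd(X, Y, step, iters):
    """Nesterov's accelerated gradient with momentum (k - 1) / (k + 2), from w = 0."""
    w_prev = w = np.zeros(X.shape[1])
    for k in range(1, iters + 1):
        v = w + (k - 1) / (k + 2) * (w - w_prev)  # look-ahead point
        w_prev, w = w, v - step * grad(X, Y, v)
    return w


def compare(n, d, iters=20, seed=0):
    """Return {method: (seconds, loss)} for the formula, GD, and NAGD."""
    X, Y = make_data(n, d, seed=seed)
    # Step 1 / L with an assumed 10% safety margin, since power iteration
    # approaches lambda_max from below. Not included in the GD/NAGD timings.
    step = 1.0 / (1.1 * lipschitz_estimate(X))
    runs = {
        "formula": (closed_form, (X, Y)),
        "GD": (gd, (X, Y, step, iters)),
        "NAGD": (nagd, (X, Y, step, iters)),
    }
    results = {}
    for name, (fn, args) in runs.items():
        t0 = time.perf_counter()
        w = fn(*args)
        results[name] = (time.perf_counter() - t0, loss(X, Y, w))
    return results


if __name__ == "__main__":
    # Hypothetical sizes; increase n, d as far as memory allows.
    for n, d in [(2000, 200), (8000, 1000)]:
        for name, (secs, val) in compare(n, d).items():
            print(f"n={n:6d} d={d:5d} {name:8s} time={secs:.4f}s loss={val:.6f}")
```

The trade-off the sketch is meant to expose: forming $X^\top X$ costs roughly $O(nd^2)$ and inverting it $O(d^3)$, while each GD or NAGD iteration costs $O(nd)$ (two matrix-vector products), so a handful of iterations can finish faster once $d$ is large, at the price of a loss above the formula's minimum. For a stricter timing comparison, the power-iteration step estimate could be moved inside the GD/NAGD timers. In practice `np.linalg.solve` or `np.linalg.lstsq` is preferable to an explicit inverse; the inverse is kept here only to match the formula.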